my thoughts on golang

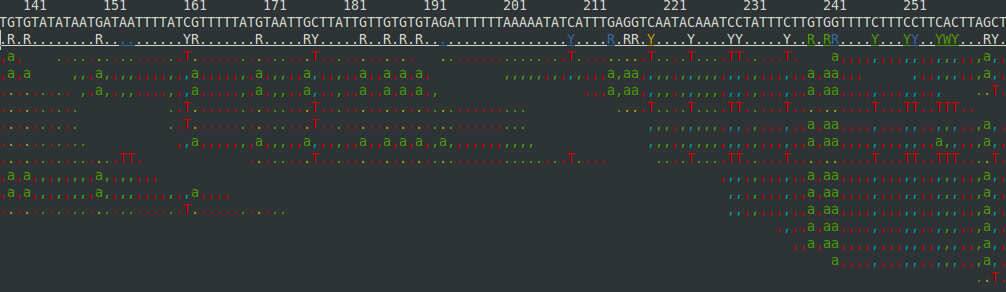

I've been playing with Go in the evenings and over xmas break for about 8 weeks now. This post is about Go the language and its tooling. I may write another post about a simple Go package for bioinformatics that I've been writing, which is under 1000 lines of code.

First, Go is boring, and though it is pretty terse, I do miss things from Python like list comprehensions. Initializing a variable and writing a for loop is easy enough, but comprehensions are one of the things I use all the time in Python. Still, I can't argue with the "less is exponentially more" mantra, as I was able to pick up the language very quickly.

The tooling is fantastic. My project has dependencies that are wrappers around C libraries, but I can simply run `go get code.google.com/p/biogo.boom` and it just works. The project is only about 1K lines of code, and it compiles in about 0.1 seconds on my laptop. And, when the time comes, I can distribute binaries for common platforms! vim-go is awesome...
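
To make the list-comprehension point concrete, here is a minimal sketch of what a one-line Python comprehension turns into in Go. The function name `squaresOfEvens` and the sample input are made up for illustration; nothing here comes from the bioinformatics package.

```go
package main

import "fmt"

// squaresOfEvens is a hypothetical example: the Go spelling of the
// Python comprehension
//
//	[x * x for x in xs if x % 2 == 0]
func squaresOfEvens(xs []int) []int {
	// Initialize the result up front; capacity is an upper bound.
	out := make([]int, 0, len(xs))
	for _, x := range xs {
		if x%2 == 0 {
			out = append(out, x*x)
		}
	}
	return out
}

func main() {
	fmt.Println(squaresOfEvens([]int{1, 2, 3, 4, 5, 6})) // prints [4 16 36]
}
```

It's more lines than the Python version, but there is nothing to learn beyond `for`, `range`, and `append`, which is roughly the trade-off described above.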