Adding bed/wig data to the dalliance genome browser

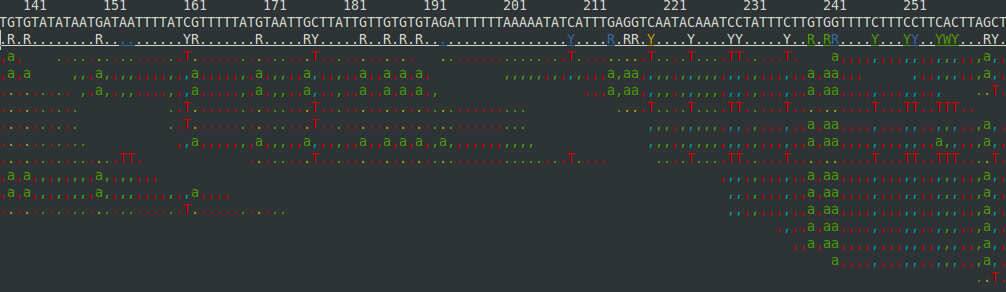

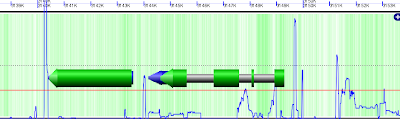

I have been playing a bit with the dalliance genome browser. It is quite useful, and I have started using it to generate links to send to researchers showing regions of interest that we find in bioinformatics analyses. I added a document to my GitHub repo describing how to display a BED file in the browser. That rst is here and is displayed inline below. It uses the UCSC binaries to create BigWig/BigBed files, because dalliance can request a subset of the data without downloading the entire file, given the correct Apache configuration (also described below). This requires a recent version of dalliance, because until recently there was a bug in its BigBed parsing.

Dalliance Data Tutorial

Dalliance is a web-based scrolling genome browser. It can display data from remote DAS servers or from local or remote BigWig or BigBed files. This will cover how to set up an HTML page that links to remote DAS services. It will also show how to create and serve BigWig and BigBed files. N...
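The conversion step could be scripted roughly as follows. This is a minimal Python sketch, not the tutorial's own procedure: it assumes the UCSC `bedToBigBed` and `wigToBigWig` binaries are on the `PATH`, that a two-column chrom.sizes file (chromosome name, length) exists for the assembly, and that BED fields contain no embedded spaces. `bedToBigBed` expects records sorted by chromosome and then start position, so the sketch sorts first. The helper names (`sort_bed_lines`, `bed_to_bigbed`, and so on) are hypothetical.

```python
import subprocess
from pathlib import Path


def sort_bed_lines(lines):
    """Sort BED records by chromosome, then numeric start.

    Mirrors `sort -k1,1 -k2,2n` under the C locale. Blank, comment,
    'track' and 'browser' lines are skipped, since bedToBigBed expects
    plain records only.
    """
    records = []
    for line in lines:
        if not line.strip() or line.startswith(("#", "track", "browser")):
            continue
        records.append(line.split())  # assumes fields are whitespace-separated
    records.sort(key=lambda fields: (fields[0], int(fields[1])))
    return ["\t".join(fields) + "\n" for fields in records]


def bedtobigbed_cmd(bed, chrom_sizes, out):
    """Argument list for: bedToBigBed in.bed chrom.sizes out.bb"""
    return ["bedToBigBed", str(bed), str(chrom_sizes), str(out)]


def wigtobigwig_cmd(wig, chrom_sizes, out):
    """Argument list for: wigToBigWig in.wig chrom.sizes out.bw"""
    return ["wigToBigWig", str(wig), str(chrom_sizes), str(out)]


def bed_to_bigbed(bed, chrom_sizes, out):
    """Write a sorted copy of `bed` next to it, then convert that copy to BigBed."""
    bed = Path(bed)
    sorted_bed = bed.with_name(bed.stem + ".sorted.bed")
    with bed.open() as fh:
        sorted_bed.write_text("".join(sort_bed_lines(fh)))
    subprocess.run(bedtobigbed_cmd(sorted_bed, chrom_sizes, out), check=True)


def wig_to_bigwig(wig, chrom_sizes, out):
    """Convert a wiggle file to BigWig by calling wigToBigWig directly."""
    subprocess.run(wigtobigwig_cmd(wig, chrom_sizes, out), check=True)
```

For example, `bed_to_bigbed("regions.bed", "hg19.chrom.sizes", "regions.bb")` would write `regions.sorted.bed` and then `regions.bb`; the chrom.sizes name is only a placeholder for whichever assembly the BED coordinates refer to. `check=True` makes a failed conversion raise instead of silently leaving a missing or partial file.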
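Because dalliance fetches only the byte ranges it needs, it may be worth confirming that the web server honours HTTP `Range` requests before sharing a link. One way to do that, sketched here in Python under the assumption that the file is served over HTTP(S) at a URL you supply, is to request the first four bytes and compare them with the BigWig (`0x888FFC26`) or BigBed (`0x8789F2EB`) magic number stored at the start of those files. A `206 Partial Content` status suggests ranges are supported, while `200` means the server ignored the header. The helper names are illustrative, and this check says nothing about any other server settings the Apache configuration may need.

```python
import struct
import urllib.request

BIGWIG_MAGIC = 0x888FFC26
BIGBED_MAGIC = 0x8789F2EB


def identify_magic(head: bytes) -> str:
    """Return 'bigwig', 'bigbed' or 'unknown' from the first 4 bytes.

    The UCSC tools write the magic number in the machine's byte order,
    so both little- and big-endian readings are checked.
    """
    if len(head) < 4:
        return "unknown"
    for fmt in ("<I", ">I"):
        (value,) = struct.unpack(fmt, head[:4])
        if value == BIGWIG_MAGIC:
            return "bigwig"
        if value == BIGBED_MAGIC:
            return "bigbed"
    return "unknown"


def check_range_support(url: str, timeout: float = 10.0):
    """Request bytes 0-3 of `url`; return (HTTP status, detected format)."""
    req = urllib.request.Request(url, headers={"Range": "bytes=0-3"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        # Read only 4 bytes even if the server ignored Range and sent everything.
        head = resp.read(4)
        return resp.status, identify_magic(head)
```

Usage would look like `status, kind = check_range_support("http://example.org/data/regions.bb")`, where the URL is a placeholder for wherever the file is actually served.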