streaming merge of sorted objects

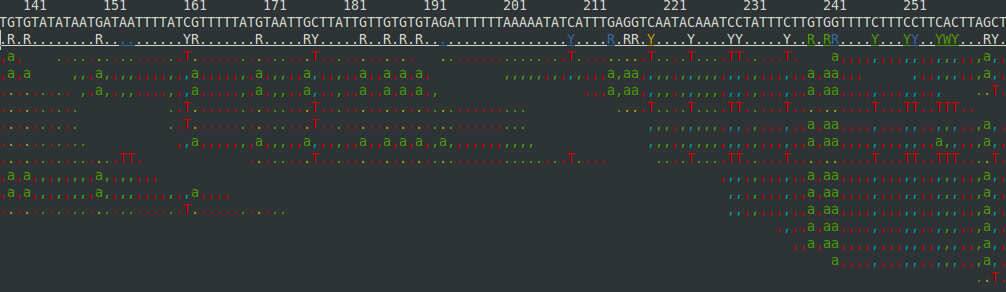

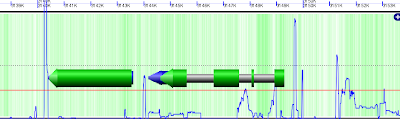

A lot of software still seems to rely on being able to read big(ish) data into memory. This is not possible (or at least not desirable) for much of the data that I work with. Python has very nice tools for operating on data one chunk at a time. When combined with a decent data layout, this can be very powerful, and even simpler than reading everything into memory. It can turn working on big(ish) data into something like working on small data.

The output of a tool that I'm using is a file of genomic positions and a value, something like:

    chr1 1234 0.9
    chr1 1239 0.12
    chr1 1249 0.12

That file is for a single sample and may contain about 10 million lines. That's not too much, but with 60 samples it can become a problem. In addition, another sample may have sites that are not in the first sample:

    chr1 1221 0.91
    chr1 1239 0.13
    chr1 1259 0.22

Many tools take a matrix with rows of genomic positions and one column per sample (e.g. R's limma, pyt...
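One way to build that matrix without reading whole files into memory is sketched below using only the standard library. It is a minimal sketch, not necessarily the approach this post takes, and it assumes that each per-sample file is whitespace-delimited `chrom pos value`, is already sorted by chromosome (compared as plain strings) and then by position, and has at most one value per site. The names `read_sites`, `_tag` and `merge_samples` are hypothetical. `heapq.merge` (its `key` argument needs Python 3.5+) lazily interleaves the sorted inputs, and `itertools.groupby` collects the records that share a position into one row, filling in `NaN` for samples that lack the site.

```python
import heapq
import io
import itertools
import math
import operator
from typing import Iterable, Iterator, List, Tuple

# Hypothetical record layout: (chrom, pos, value), one per input line.
Record = Tuple[str, int, float]


def read_sites(handle: Iterable[str]) -> Iterator[Record]:
    """Lazily parse 'chrom pos value' lines; only one line is in memory at a time."""
    for line in handle:
        if not line.strip():
            continue  # skip blank lines
        chrom, pos, value = line.split()
        yield chrom, int(pos), float(value)


def _tag(records: Iterable[Record], column: int) -> Iterator[Tuple[str, int, int, float]]:
    """Attach the sample's column index so each merged value knows where it goes."""
    for chrom, pos, value in records:
        yield chrom, pos, column, value


def merge_samples(samples: List[Iterable[Record]]) -> Iterator[Tuple[str, int, List[float]]]:
    """Stream the union of sites across samples as (chrom, pos, row) tuples.

    Assumes every input is sorted by (chrom as a string, pos) and has at most
    one value per site. Samples that lack a site get NaN in their column.
    """
    site = operator.itemgetter(0, 1)  # sort/group key: (chrom, pos)
    tagged = [_tag(records, col) for col, records in enumerate(samples)]
    # heapq.merge keeps only the next pending record from each input in memory.
    merged = heapq.merge(*tagged, key=site)
    # Records for the same site are now adjacent, so groupby gathers them into one row.
    for (chrom, pos), group in itertools.groupby(merged, key=site):
        row = [math.nan] * len(samples)
        for _, _, col, value in group:
            row[col] = value
        yield chrom, pos, row


if __name__ == "__main__":
    # The two example samples from the text, standing in for real files.
    sample1 = io.StringIO("chr1 1234 0.9\nchr1 1239 0.12\nchr1 1249 0.12\n")
    sample2 = io.StringIO("chr1 1221 0.91\nchr1 1239 0.13\nchr1 1259 0.22\n")
    for chrom, pos, row in merge_samples([read_sites(sample1), read_sites(sample2)]):
        print(chrom, pos, *row, sep="\t")
```

Run on the two example samples, this prints five tab-separated rows in position order, with `nan` wherever a sample lacks the site (for example `chr1 1221 nan 0.91` and `chr1 1239 0.12 0.13`). With real files, passing `read_sites(open(path))` for each of the 60 samples keeps memory proportional to the number of samples rather than the number of lines. Because the key compares chromosome names as strings, `chr10` sorts before `chr2`; if the files use a different chromosome order, the key has to be changed to match, since `heapq.merge` does not check that its inputs are sorted and will silently yield out-of-order rows.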